Executive Summary: Azure Arc-enabled Kubernetes

An overview of Azure Arc-enabled Kubernetes, covering its capabilities and pricing model.

Why Azure Arc-enabled Kubernetes

Azure Arc-enabled Kubernetes allows you to manage, secure, and observe Kubernetes clusters across on-premises, multi-cloud, and edge environments using Azure’s unified management tools. It provides consistent and centralized observability, security, and policy enforcement across all your clusters in their respective environments, enhancing operational efficiency for enterprise platform teams.

Capabilities of Azure Arc-enabled Kubernetes

Inventory Management

Does your platform team know how many clusters are deployed across the enterprise? Are they upgraded to the latest Kubernetes version?

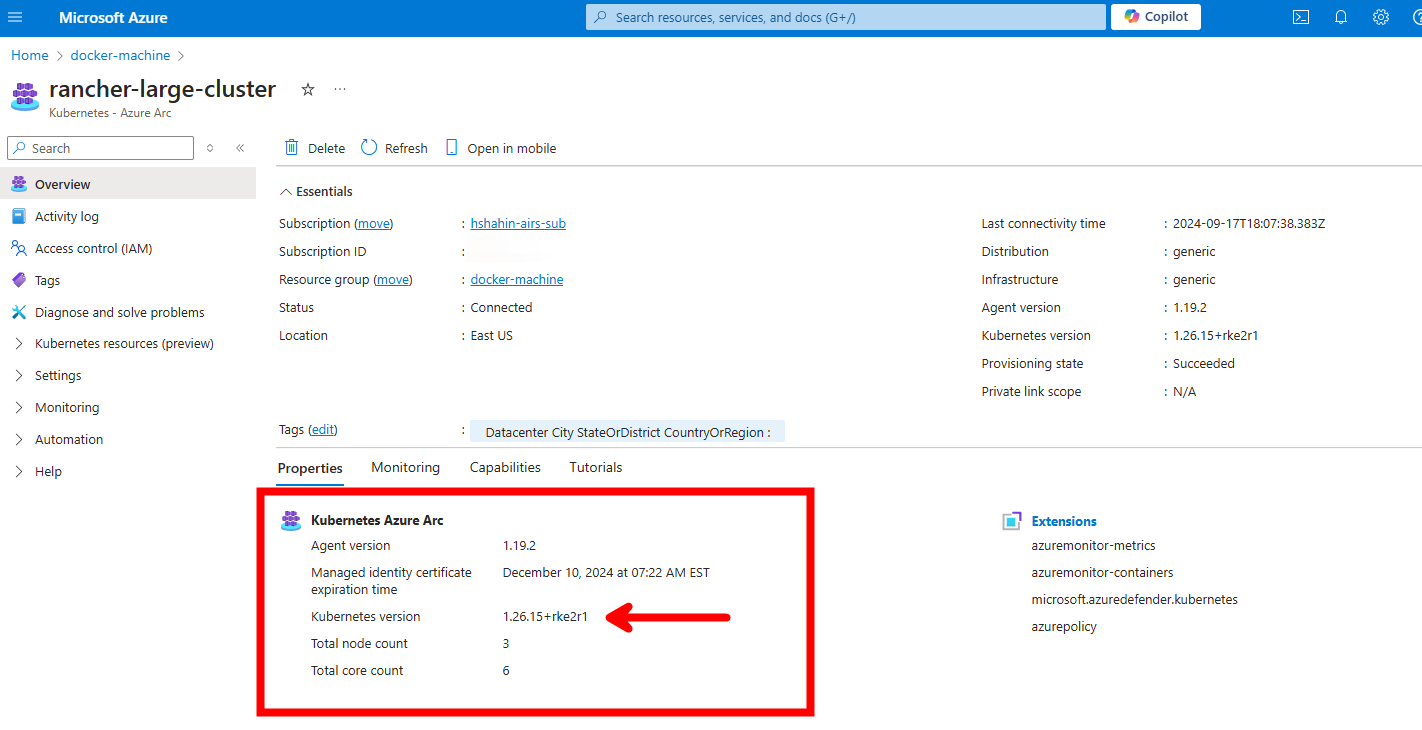

When using Azure Arc-enabled Kubernetes, the first step is connecting the cluster to Azure Arc. Azure Arc pods are deployed to your cluster and establish an outbound connection to Azure. From here, your cluster is officially onboarded to Azure Arc and is viewable in Azure as if it were a native Azure service.

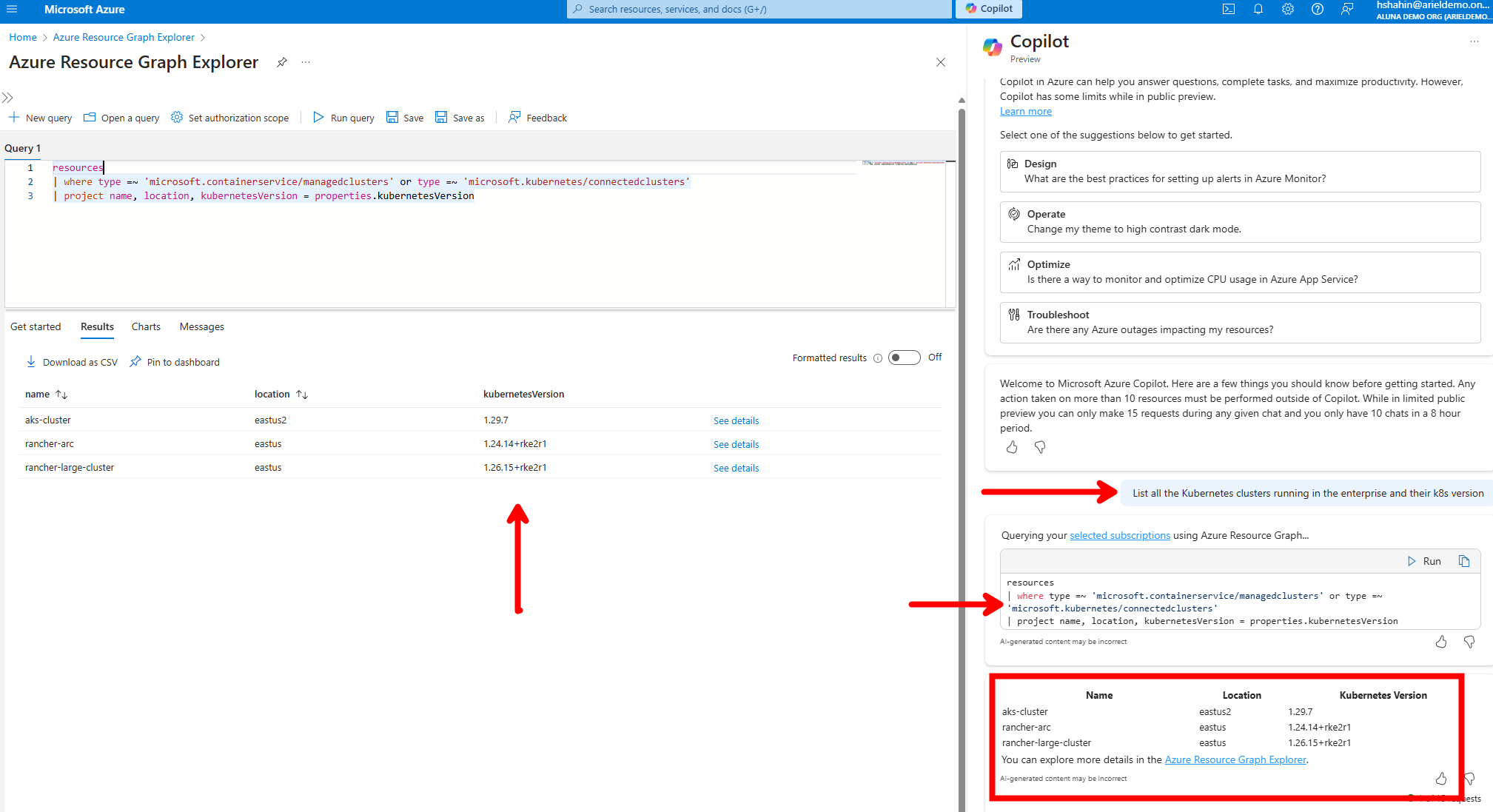

A great benefit of taking just this step is that you can now use Azure Resource Graph and Microsoft Copilot in Azure to query your full inventory.
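As a rough sketch of what such an inventory query could look like, the Python snippet below builds a Resource Graph (KQL) query string. The helper name `build_inventory_query` is hypothetical, and the resource types and the `properties.kubernetesVersion` field are assumptions about how Arc-connected and AKS clusters appear in Resource Graph, so verify them against your own environment.

```python
# Assumed resource types for Arc-connected and AKS clusters in Resource Graph.
CLUSTER_TYPES = (
    "microsoft.kubernetes/connectedclusters",      # Arc-enabled Kubernetes clusters
    "microsoft.containerservice/managedclusters",  # AKS clusters
)


def build_inventory_query(cluster_types=CLUSTER_TYPES):
    """Return a KQL string that lists clusters with their Kubernetes version."""
    type_list = ", ".join(f"'{t}'" for t in cluster_types)
    return (
        "Resources\n"
        f"| where type in~ ({type_list})\n"
        # Assumption: both resource types expose properties.kubernetesVersion.
        "| project name, type, location, "
        "kubernetesVersion = tostring(properties.kubernetesVersion)\n"
        "| order by name asc"
    )


if __name__ == "__main__":
    print(build_inventory_query())
```

The printed query can be pasted into Resource Graph Explorer in the portal, or passed to `az graph query -q` if the Azure CLI resource-graph extension is installed.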

For example, in my environment I have three clusters deployed: one AKS cluster and two Rancher clusters connected to Azure through Arc. I asked Azure Copilot "List all the Kubernetes clusters running in the enterprise and their k8s version" and I got the result below:

Extensions

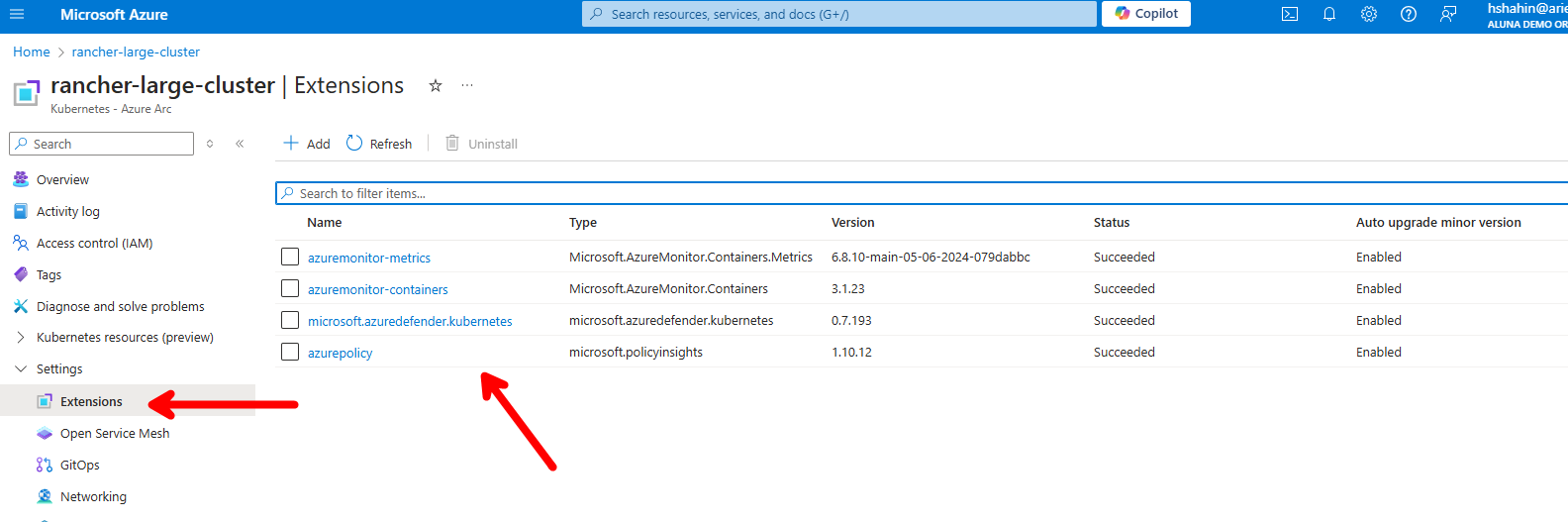

After connecting a cluster to Azure Arc, you can install extensions to really bring Azure capabilities down to the cluster. Similar to AKS add-ons, these extensions bring down native capabilities you will want to effectively govern, monitor, and secure the cluster.

The full list of extensions is available in the docs, but the ones installed here in particular give the most benefit to platform teams that want to use Arc as a way to manage all their clusters in a centralized, consistent way.

Azure Monitor Container Insights and Metrics

Are any pods currently hitting errors in production, causing them to continuously restart? Are there any clusters where nodes are under-utilized so that we can achieve better cost savings?

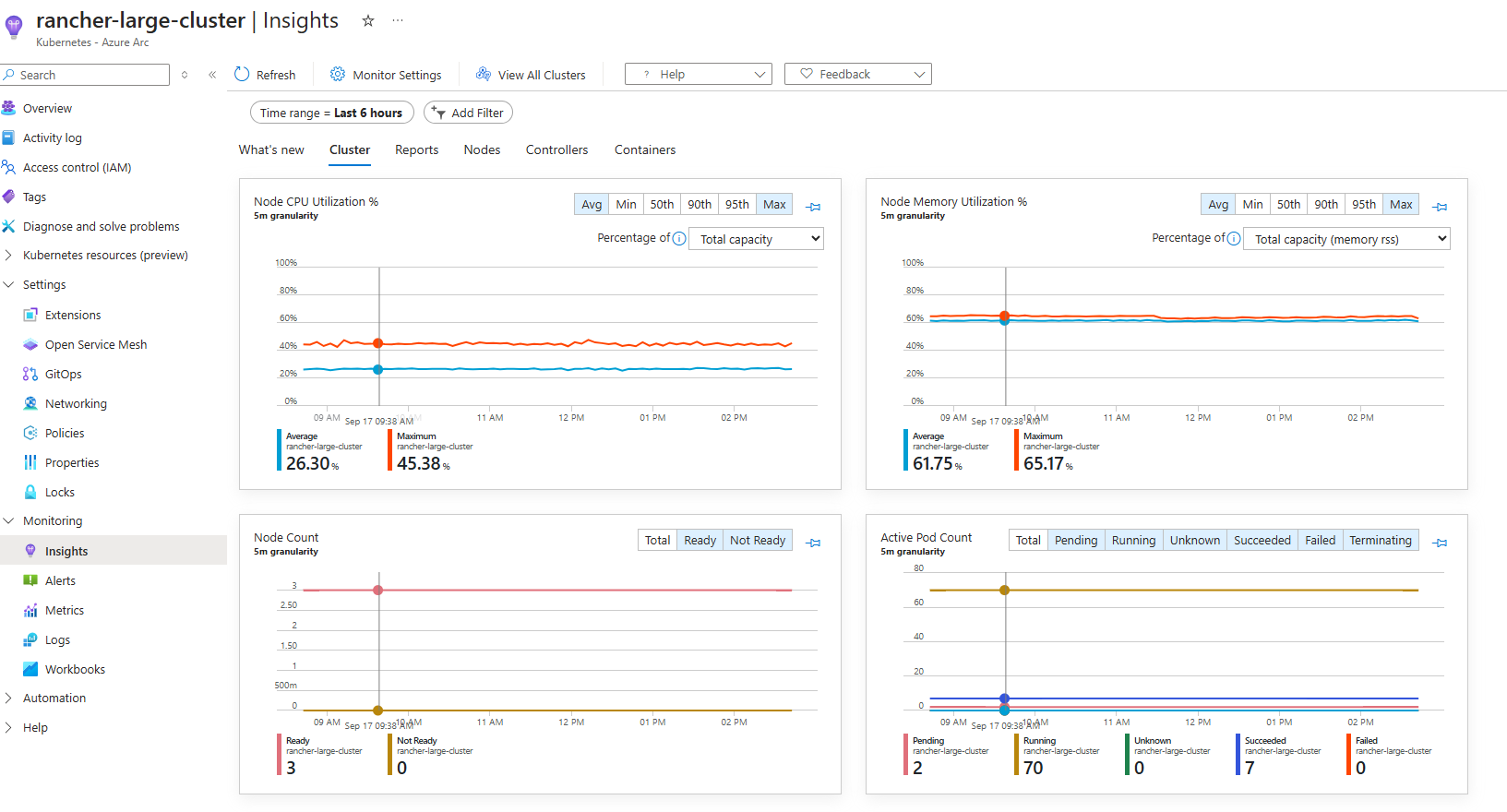

Azure Monitor Container Insights provides visibility into the performance and logs of workloads deployed on the Kubernetes cluster. Use this extension to collect memory and CPU utilization metrics from controllers, nodes, and containers as well as stderr/stdout from containers running on the cluster.

A key benefit here is that you can now use the same dashboards and queries to get a health status across your estate, not just on a per-cluster basis.

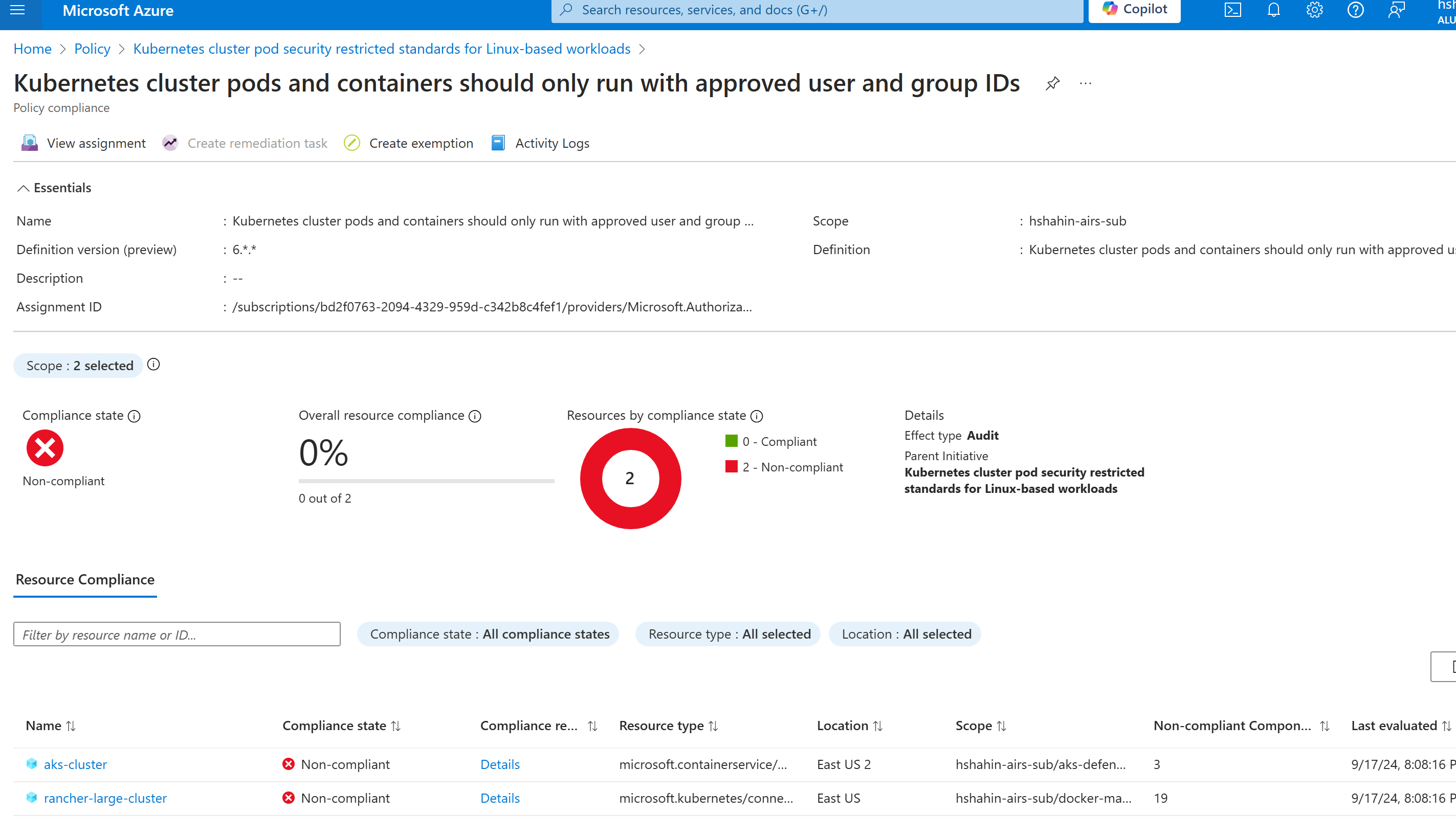

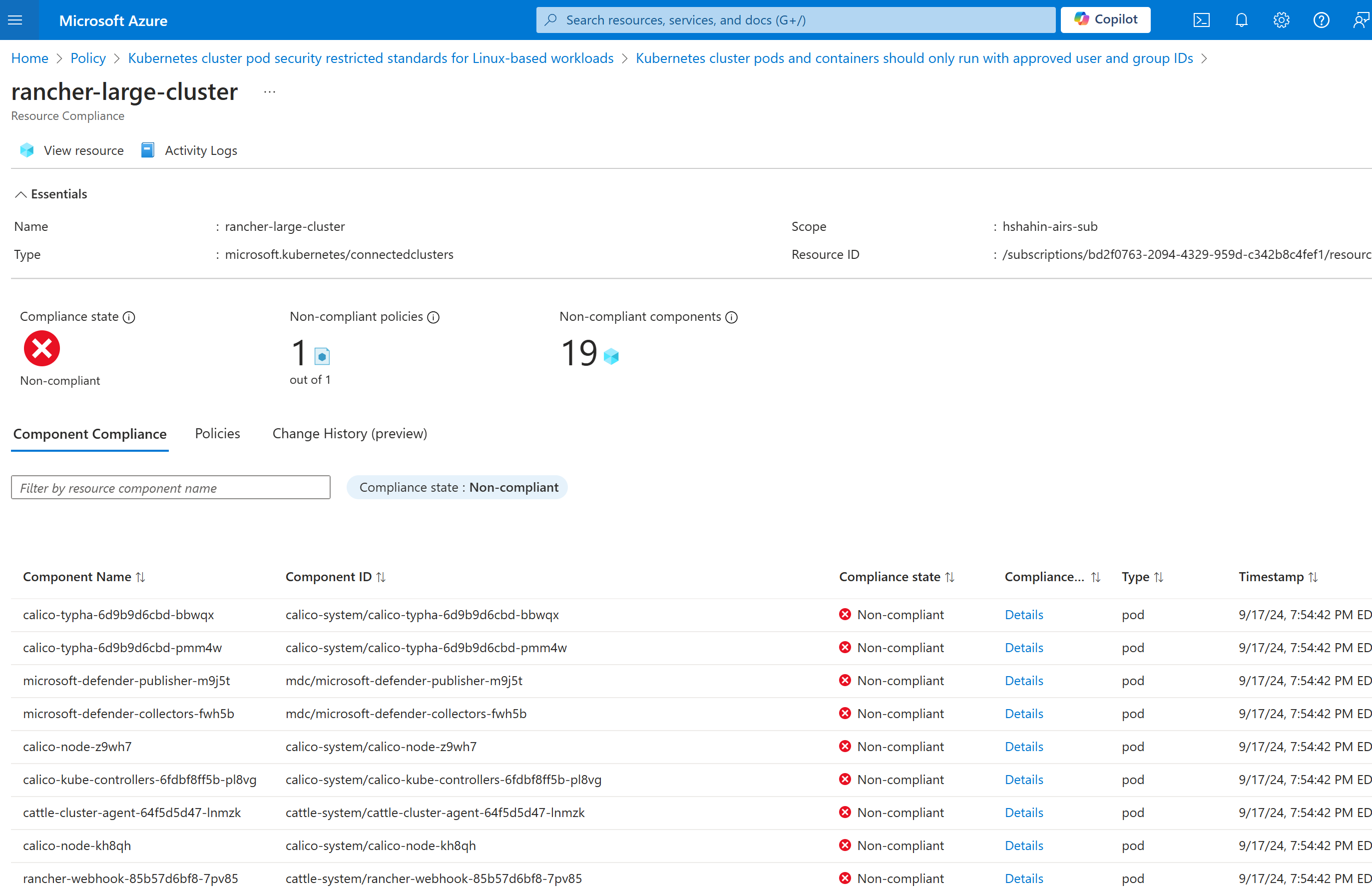

Azure Policy

Are there pods running with privileges? Are containers being sourced from trusted registries? Are liveness and readiness probes being configured?

Azure Policy allows you to govern your cluster and ensure it complies with security and deployment best practices. Policies can be configured in audit mode to report on compliance, and then taken a step further in deny mode to proactively block activities that violate the configured policies.

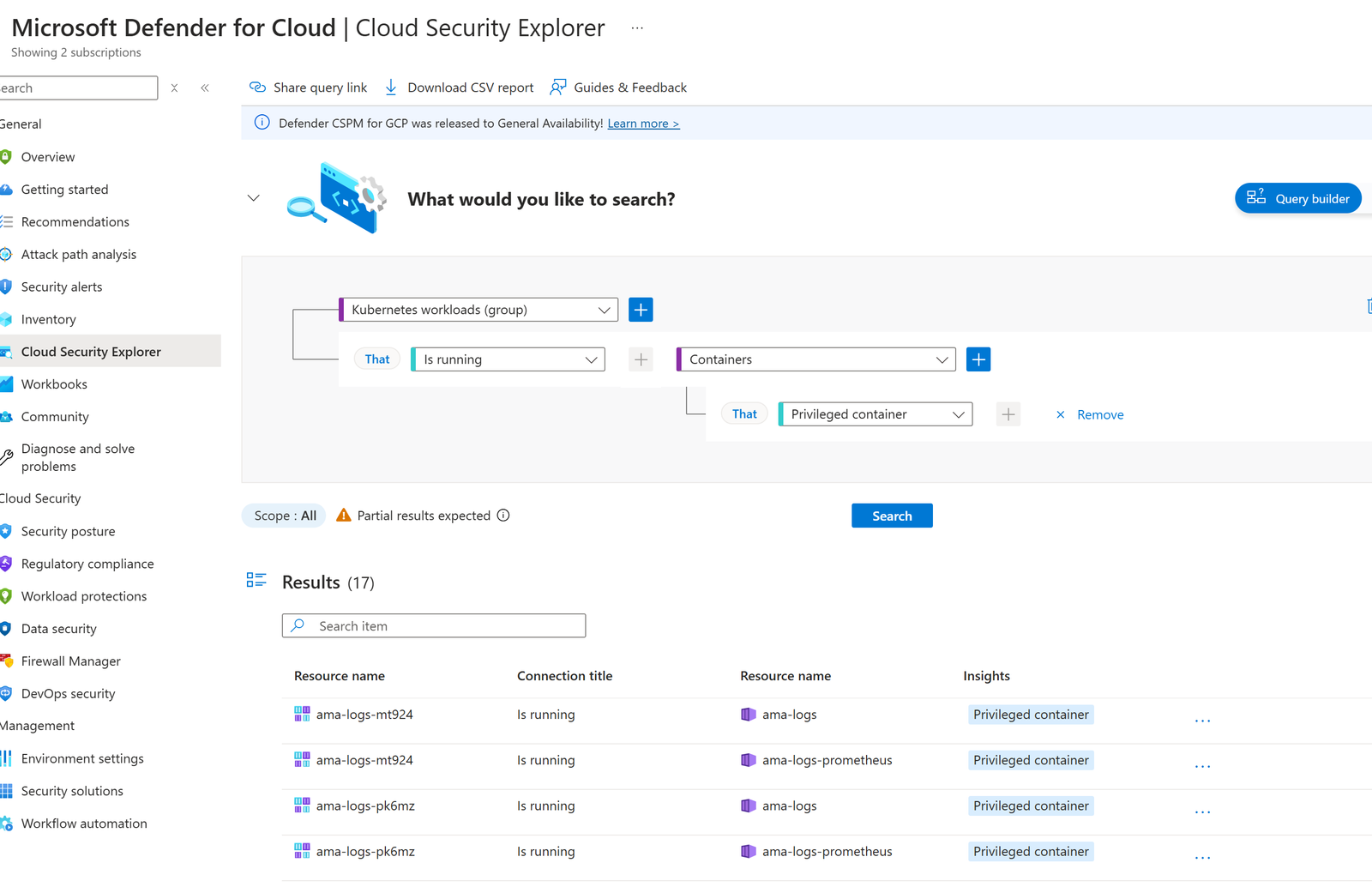

Microsoft Defender for Containers

How do I monitor running processes to ensure no compromises occur in production? Are there any risks in my current deployment that I can remediate proactively?

Defender for Containers alerts on detected runtime threats and allows you to proactively mitigate risks detected in the environment. A full blog on Securing Kubernetes with Defender for Containers covers all the details. The same capabilities can be brought to all clusters in all environments through Arc.

Pricing

Ultimately, pricing depends on the Azure Arc extension being deployed. Think of connecting a cluster to Azure Arc as step 1, and then installing extensions that your enterprise desires as step 2.

Connecting Kubernetes clusters to Azure Arc is free. The cluster will appear in the Azure Portal and can be queried for inventory management using Azure Resource Graph.

From there, we can evaluate on an extension by extension basis.

Azure Policy Extension

Free, no additional charge.

Defender for Containers Extension

Pricing is simple: $7/core/month. No additional charges for the data stored in Defender.

Example for a 12-Node Cluster with 8 Cores per Node:

- 12-Node Cluster, 8 Cores per Node

- 12 nodes * 8 cores/node = 96 total cores

- 96 cores * $7/core/month = $672/month

- Total: $672/month
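The arithmetic above can be sketched as a small Python helper so you can plug in your own cluster sizes. The function name is hypothetical, and the $7/core/month default simply mirrors the price quoted in this post, so treat it as an assumption that may change.

```python
def defender_monthly_cost(nodes: int, cores_per_node: int,
                          price_per_core: float = 7.0) -> float:
    """Estimate the monthly Defender for Containers cost from total core count."""
    total_cores = nodes * cores_per_node
    return total_cores * price_per_core


if __name__ == "__main__":
    # 12 nodes * 8 cores/node = 96 cores, at $7/core/month
    print(defender_monthly_cost(12, 8))  # 672.0
```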

Azure Monitor Container Insights and Metrics

Like other logging solutions in Azure, this is dependent on the amount of data that is ingested into Azure Monitor. Logs from the cluster are ingested into Log Analytics and metrics are scraped and stored in Managed Prometheus on Azure.

- Logs (Log Analytics): $2.30 per GB, 31 days of retention included

- Metrics (Managed Prometheus): $0.16/10 million samples ingested, metric queries priced at $0.001/10 million samples processed. Data is retained for 18 months at no extra charge.

The unit prices above are hard to reason about on their own, so let's understand pricing through two examples: first using a real Rancher cluster I deployed, and next through the Pricing Calculator for a simulated 12-node cluster.

Example for 3-Node Rancher Cluster

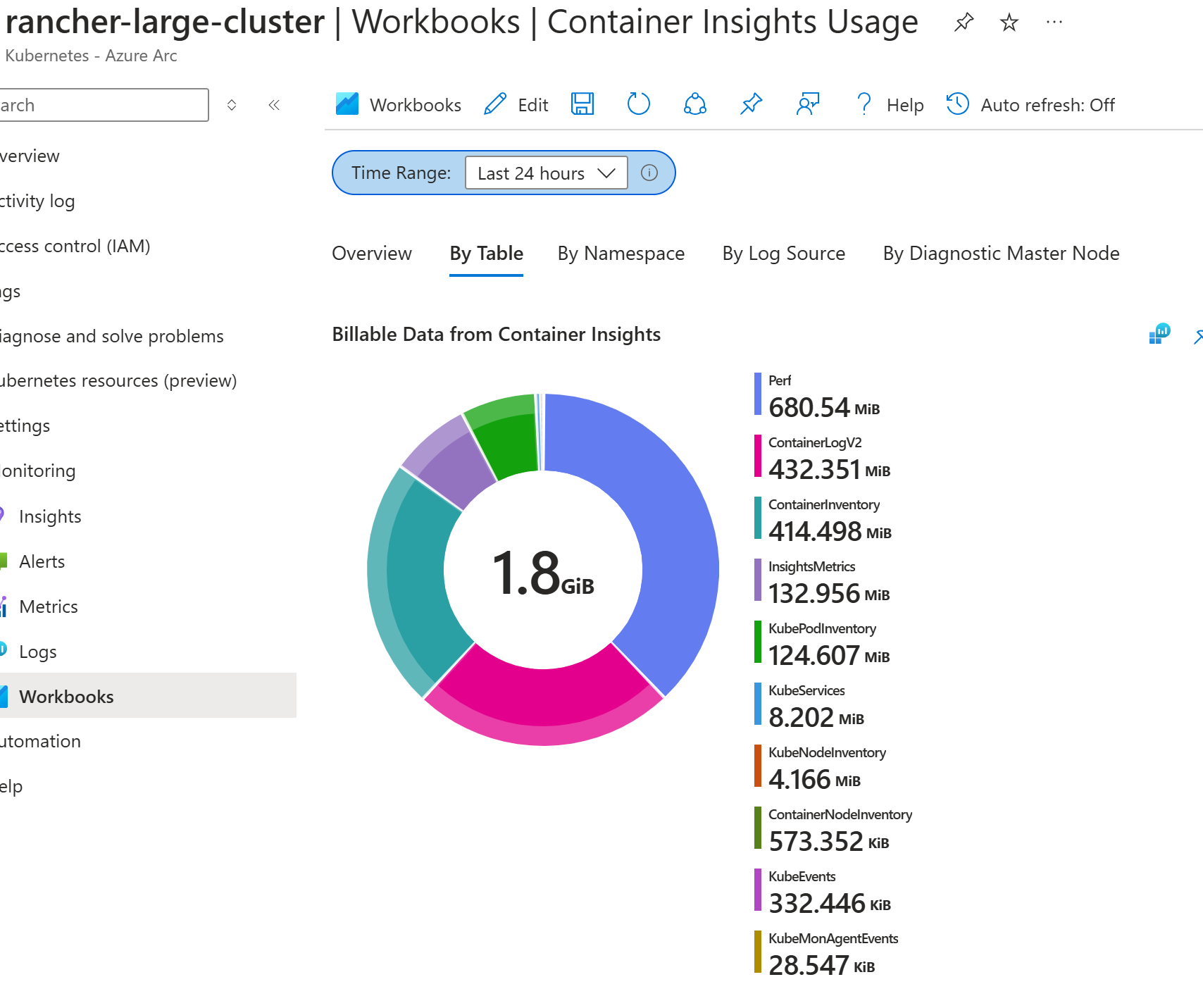

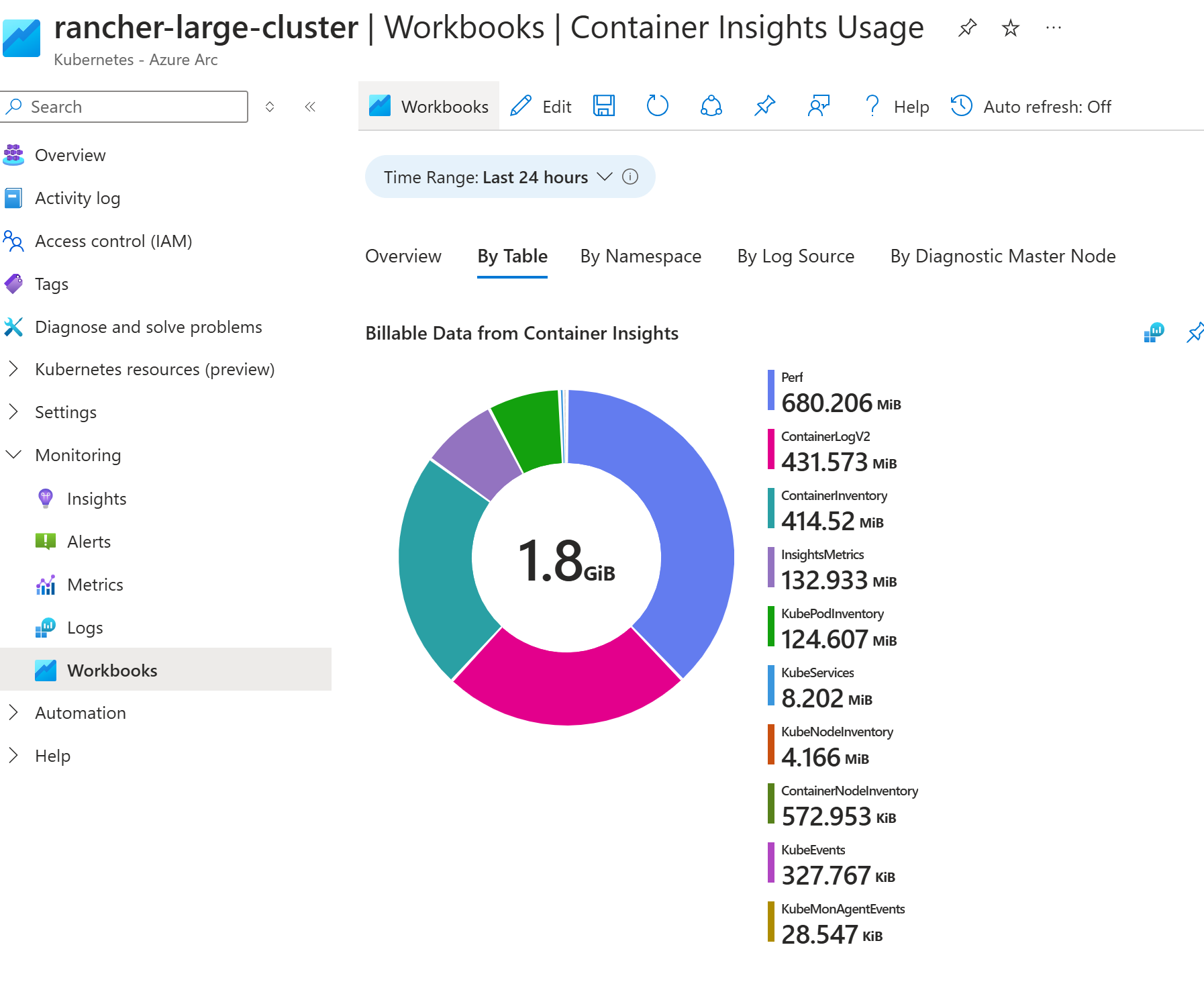

- Container Insights actually shows how much log data is ingested so that you can optimize on cost. For this cluster, ~1.8 GB is ingested per day into Log Analytics:

- $2.30 per GB

- 1.8 GB * $2.30 * 30 Days = $124.20

- Total: $124.20 per month (this is with no optimizations or namespace filtering)

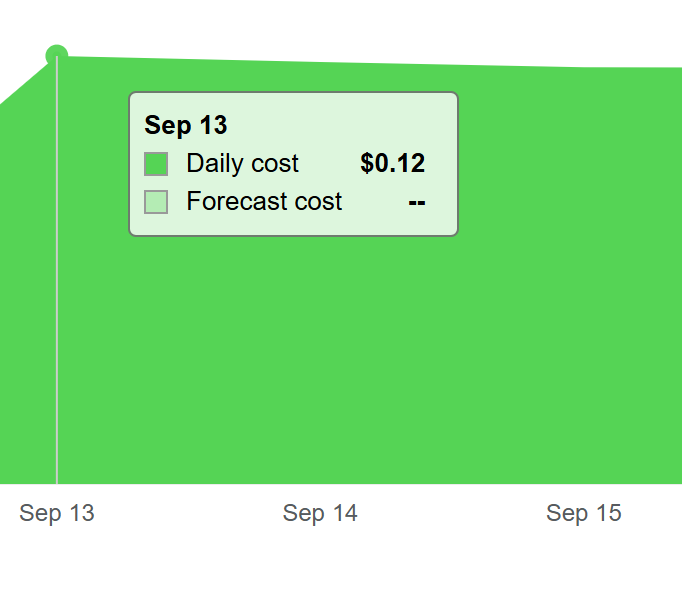

Metrics are much cheaper to store given their smaller size. For this particular cluster, it costs about $0.12 per day to scrape cluster-level metrics every 30 seconds.

- $0.12/Day * 30 Days = $3.60 per month

- Total: $3.60 per month

In summary, centralizing metrics and logs into Azure using the defaults for all configurations on this 3-Node cluster costs around $127.80 per month. This uses the standard default ingestion settings, with no optimizations applied.
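To repeat this estimate for your own cluster, here is a minimal Python sketch of the same calculation. The function names are hypothetical, and the defaults ($2.30 per GB, 30-day month) are taken from the figures in this post rather than from a live price list.

```python
LOG_PRICE_PER_GB = 2.30  # Log Analytics ingestion price quoted in this post
DAYS_PER_MONTH = 30      # simplifying assumption used throughout the examples


def monthly_log_cost(gb_per_day, price_per_gb=LOG_PRICE_PER_GB, days=DAYS_PER_MONTH):
    """Monthly log ingestion cost from an observed daily ingestion volume."""
    return gb_per_day * price_per_gb * days


def monthly_metric_cost(cost_per_day, days=DAYS_PER_MONTH):
    """Monthly metrics cost from an observed daily metrics cost."""
    return cost_per_day * days


if __name__ == "__main__":
    logs = monthly_log_cost(1.8)          # ~1.8 GB/day from the usage workbook
    metrics = monthly_metric_cost(0.12)   # ~$0.12/day for Managed Prometheus
    print(f"logs=${logs:.2f} metrics=${metrics:.2f} total=${logs + metrics:.2f}")
    # logs=$124.20 metrics=$3.60 total=$127.80
```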

Example from Azure Pricing Calculator for 12-Node Cluster Ingesting 25GB per Day

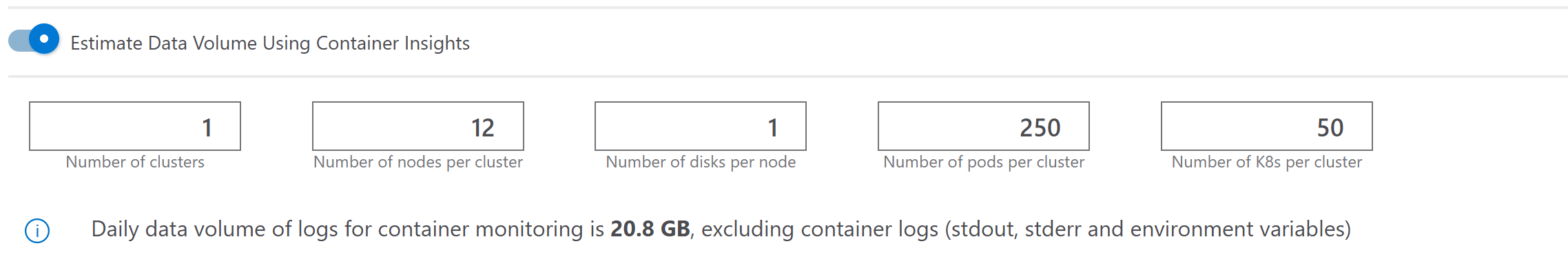

The pricing calculator includes an estimator that takes a few parameters about a cluster. In this case, let's assume we have the following:

- 12-node cluster

- 1 disk per node

- 250 pods

- 50 services (final column is number of services)

Let's also add 5 GB per day to the calculator's estimate to account for the stdout logs emitted by our running pods. As shown in our 3-node Rancher cluster, ContainerLogV2 was around 25% of the ingested data:

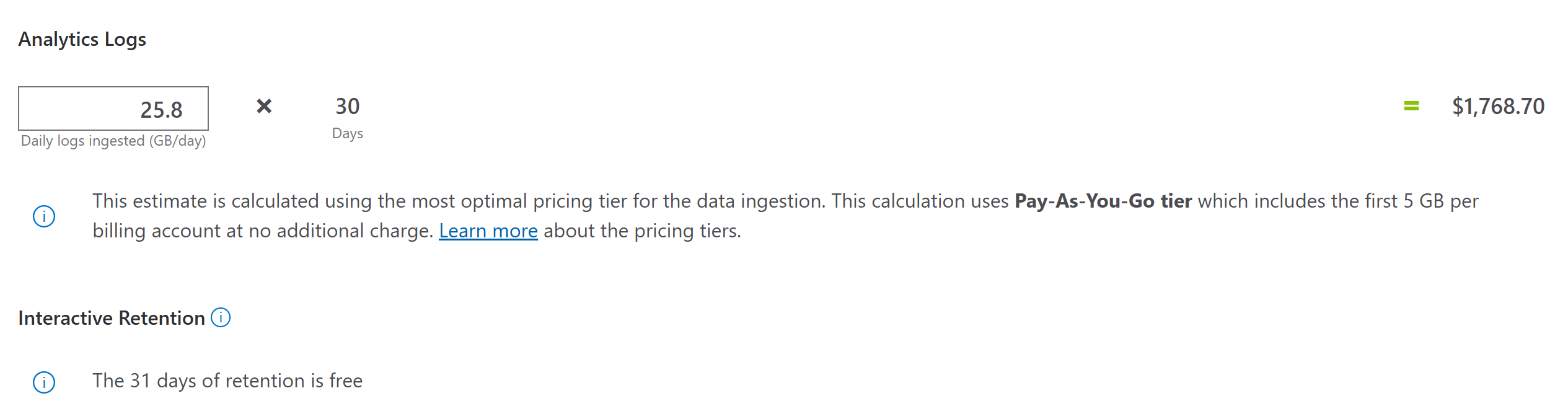

- 20.8 GB + 5 GB = 25.8 GB estimated ingestion per day

- ~25.8 GB ingested per day, $2.30 per GB for 30 days

- Total: $1,768.70 per month (no optimization; this calculator figure appears to net out a 5 GB monthly free allowance)

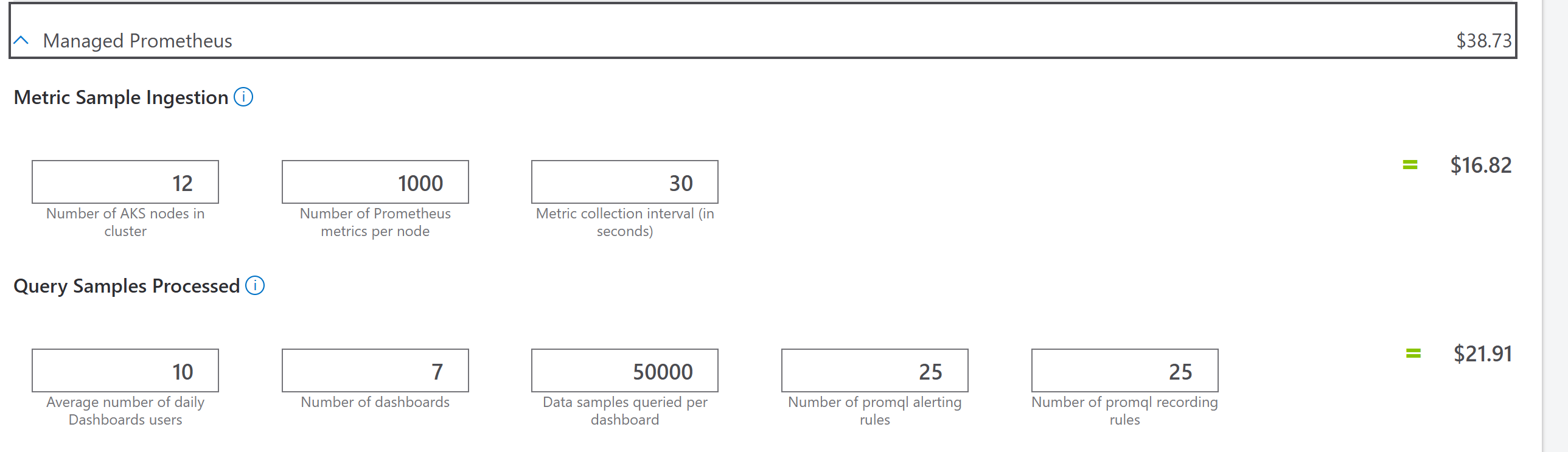

The metric cost is relatively insignificant compared to the log cost. An estimate is shown below:

- Total: $38.73 per month

For a cluster like this, the total price would be $1,807.43 per month. Of course this is an estimate, so let's understand how to get real values and optimize on the log cost in particular.
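To sanity-check these totals, here is a minimal Python sketch. The `free_gb_per_month` parameter is an assumption on my part: subtracting 5 GB per month before billing is what reproduces the calculator's $1,768.70, whereas a plain 25.8 GB * 30 days * $2.30 would give $1,780.20. The function name is hypothetical.

```python
def calculator_style_log_cost(gb_per_day, price_per_gb=2.30, days=30,
                              free_gb_per_month=5.0):
    """Monthly log cost after subtracting an assumed free monthly allowance."""
    billable_gb = max(gb_per_day * days - free_gb_per_month, 0.0)
    return billable_gb * price_per_gb


if __name__ == "__main__":
    log_cost = calculator_style_log_cost(25.8)  # 20.8 GB + 5 GB per day
    metric_cost = 38.73                         # calculator estimate quoted above
    print(f"logs=${log_cost:.2f} total=${log_cost + metric_cost:.2f}")
    # logs=$1768.70 total=$1807.43
```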

How to Understand Your Log Ingestion and How to Optimize to Save

As shown in the 3-Node Cluster example from above, when you deploy Container Insights you get a workbook called Container Insights Usage that breaks down the ingested data cost and associated tables that are populated.

As you can see, the big tables ingesting data are Perf and ContainerLogV2. Perf contains performance data about the cluster and pods running, and ContainerLogV2 stores logs emitted by containers running in the cluster.

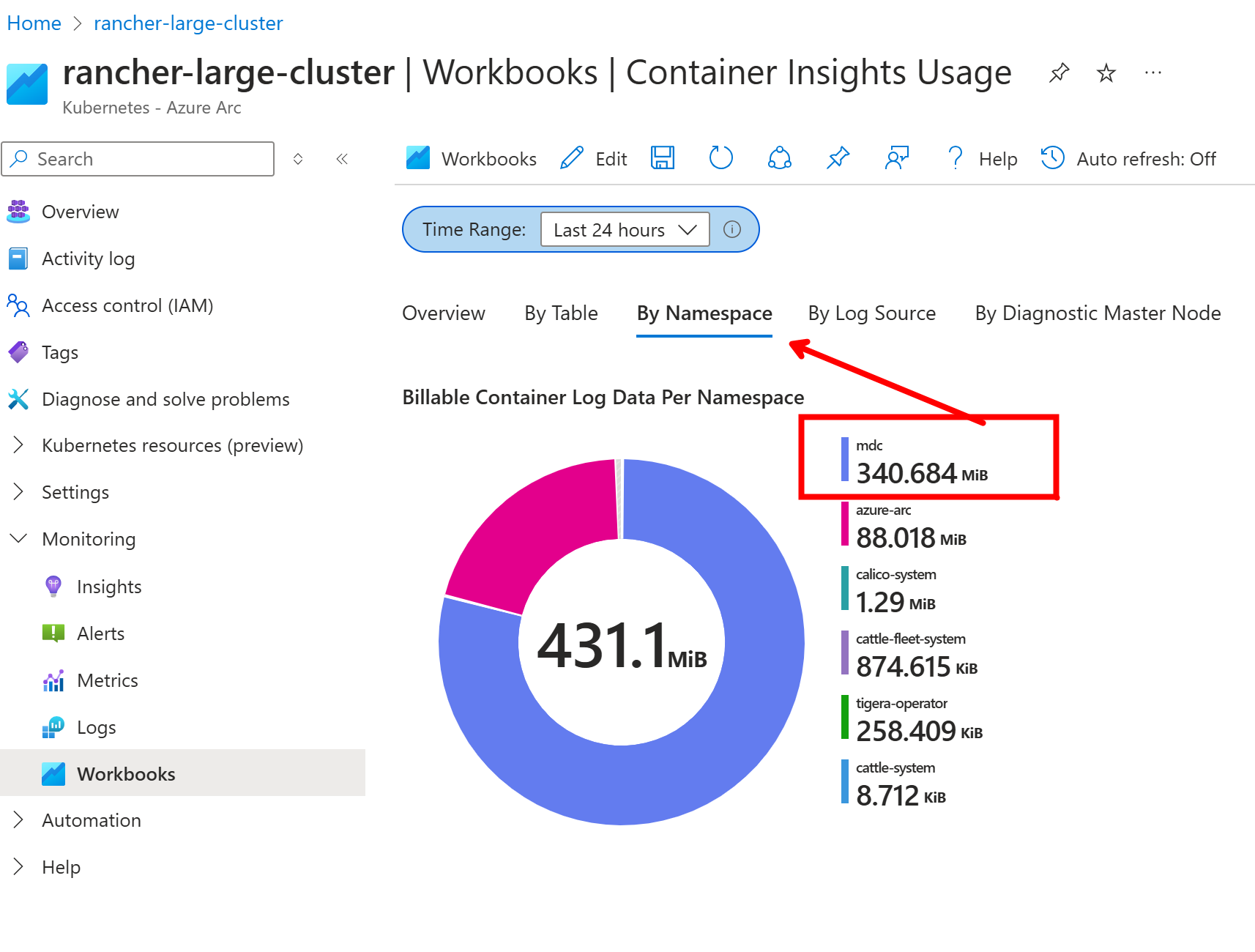

To control costs here, the most effective method is namespace filtering. First, in the Container Insights Usage workbook, we can get a breakdown of the logs ingested per namespace that are feeding into ContainerLogV2. Notice that effectively all the logs are coming from the mdc namespace (which runs the Defender pods) and the azure-arc namespace (which runs the Azure Arc agents):

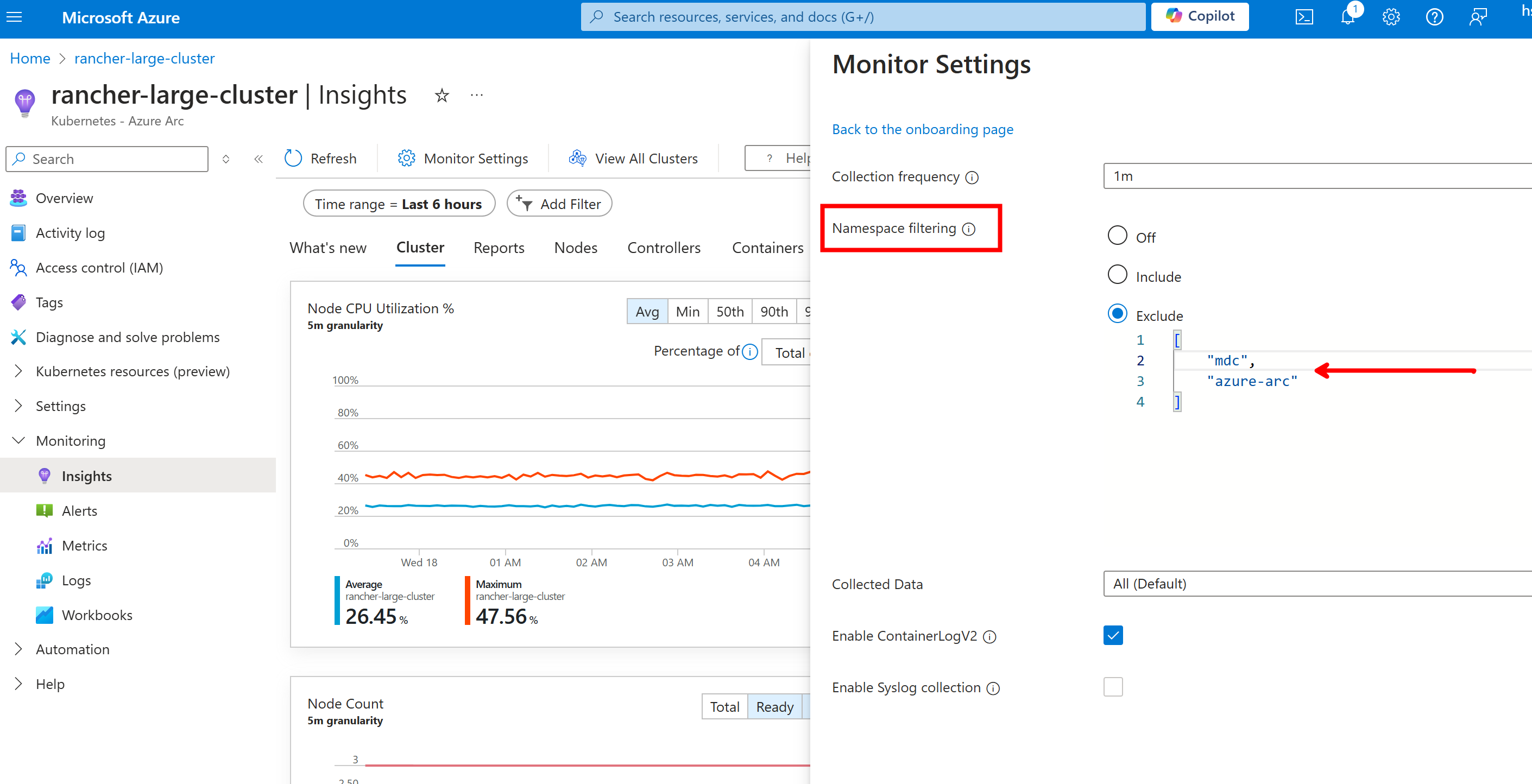

If I want to filter out these namespaces, I can do that through the portal or in a config map in the cluster. This will effectively wipe away the 0.5 GB per day I'm ingesting for ContainerLogV2 and cut my log cost by roughly 25%.
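As a quick estimate of what that filtering is worth, the hypothetical helper below compares the filtered volume against the total daily ingestion from the 3-node example (0.5 GB out of 1.8 GB per day). The $2.30 per GB price and 30-day month are the same assumptions used earlier in this post.

```python
def filtering_savings(total_gb_per_day, filtered_gb_per_day,
                      price_per_gb=2.30, days=30):
    """Return (monthly dollars saved, fraction of the log bill saved)."""
    before = total_gb_per_day * price_per_gb * days
    saved = filtered_gb_per_day * price_per_gb * days
    return saved, saved / before


if __name__ == "__main__":
    saved, fraction = filtering_savings(1.8, 0.5)
    print(f"saves ${saved:.2f}/month ({fraction:.0%} of log cost)")
    # saves $34.50/month (28% of log cost)
```

The result lands a little above the rough 25% figure, which is expected since 0.5 GB and 1.8 GB are themselves rounded values read off the workbook.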

This is a critical step in optimizing your costs. There are other tactics we can apply to further optimize on costs - one in particular is completely cutting out the Perf log table and relying on the Managed Prometheus and Grafana dashboard visuals, but I normally like to start with namespace filtering since this is an effective and easy mechanism to reduce unnecessary logs.

In summary, the best way to capture what your Container Insights pricing will look like is to enable the extension, have it run for a few days, and closely monitor the ingestion size. From there you can use the information to filter out unnecessary data to optimize.

Keep in mind that pricing is subject to change and everything shown above is based on estimates and examples. The goal of this section is to help you understand how to view the ingestion and how to optimize it for your purposes.

Summary

Azure Arc-enabled Kubernetes is a bridge to managing a cluster through Azure. There are more extensions than the ones described above, but these are the extensions I feel empower platform teams within an enterprise to make real progress on securing, observing, and governing their inventory of Kubernetes clusters.